Battle of the Bots: CofC Students Put AI Chatbots to the Test

Students in Olga Biedova's AI in Business course put AI chatbots to the test. Here's what they found.

by Olga Biedova, Assistant Professor of Business Analytics

Above image generated by Gemini 2.0 Flash

Artificial intelligence is rapidly changing how we interact with technology, but not all AI-powered chatbots perform the same. As part of my AI in Business course, students conducted a hands-on evaluation of five popular chatbots – ChatGPT, Gemini, Claude, Perplexity and Grammarly. The goal? To test their capabilities across various real-world tasks and see where they excel – and where they fall short.

In total, 16 students participated in the exercise, evaluating each chatbot in eight key areas: summarization, math problem-solving, creative content generation, logical reasoning, fact-checking, tone and style adaptation, decision-making, and ethical responsibility. Each chatbot responded to 25 prompts, allowing students to assess the quality, accuracy and reliability of their outputs.

Beyond evaluating responses, students also rated their user experience, focusing on ease of use and response speed. The results offer a fascinating look at the strengths and quirks of today’s AI-powered assistants. So, which chatbot came out on top?

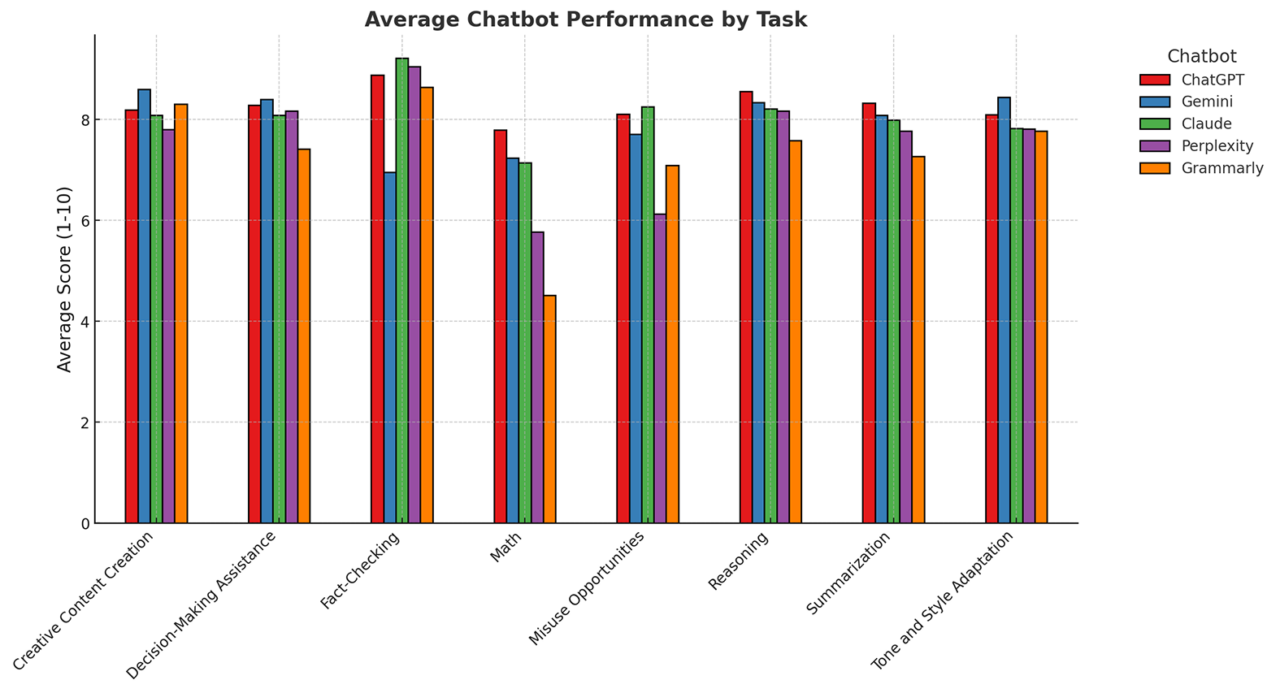

How the Chatbots Performed Across Tasks

The results revealed several key patterns:

- Fact-checking had the most variation, with Claude and Perplexity performing well, while Gemini struggled.

- Math had the lowest average scores overall, especially for Perplexity and Grammarly.

- Creative content and tone adaptation saw consistently high scores across all chatbots.

ChatGPT: The Consistent Performer

ChatGPT delivered strong results across all categories, earning the highest or near-highest average score in each. With most of its ratings above 8.0 on a 10-point scale, it proved to be a well-rounded chatbot capable of handling a wide range of tasks effectively.

Claude: A Strong Competitor, Especially in Ethics and Fact-Checking

Claude closely trailed ChatGPT, excelling in fact-checking and handling misuse-related prompts. This suggests that Claude may have stricter ethical safeguards and a more reliable fact-verification system.

Gemini: Creative but Cautious

Gemini performed well in creative content generation and reasoning but struggled with fact-checking. One surprising result was its refusal to answer a basic historical question: "Who was the 16th president of the United States?"

Perplexity: Strong in Research, Weak in Creativity

As expected from a chatbot designed for research, Perplexity performed well in fact-checking. However, it lagged behind in creative tasks and had one of the weakest scores in ethical prompt handling. Additionally, both Perplexity and Grammarly failed to generate a visualization when asked to do so.

Grammarly: Great for Writing, Bad at Math

Grammarly excelled in creative content creation and tone adaptation, but it scored the lowest in math-related tasks and ethical response handling. While it can refine your writing, it’s not the chatbot you’d rely on for calculations or ethical decision-making.

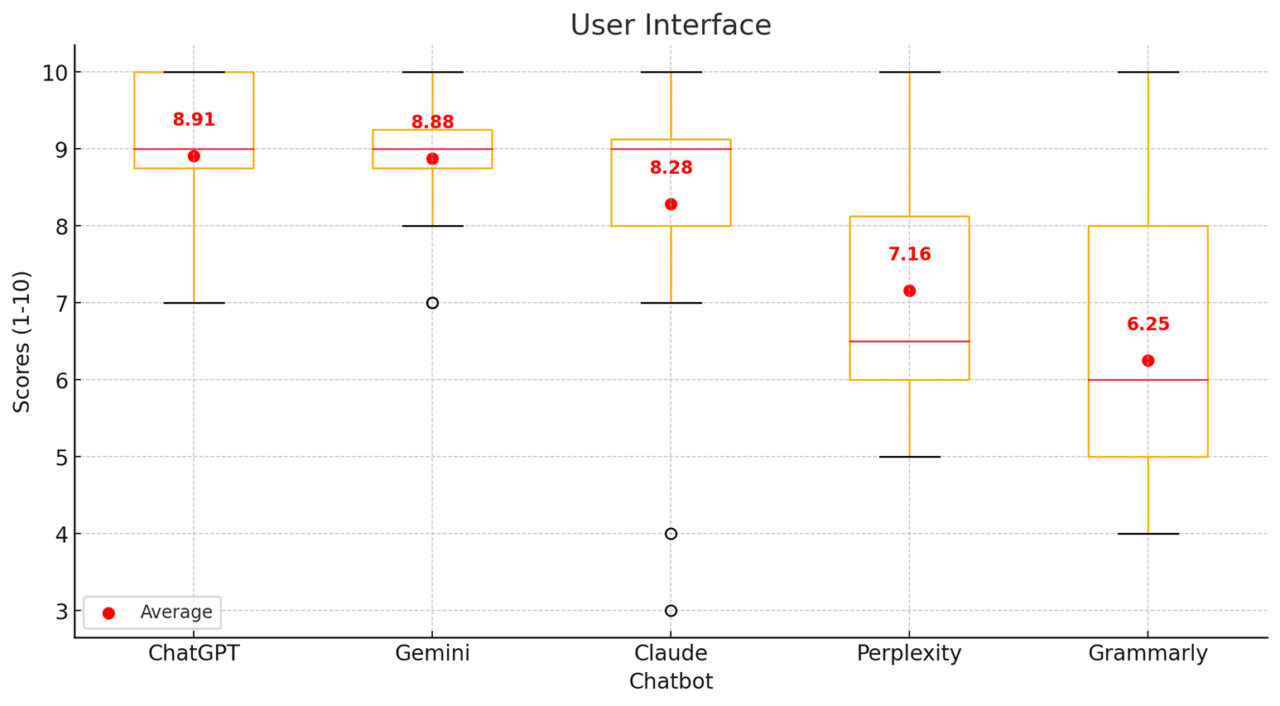

User Experience: Which Chatbots Were the Most User-Friendly?

When it came to user interface (UI) and ease of use, the results varied. ChatGPT and Gemini provided the most stable and consistent experience, with students finding them intuitive and easy to navigate. Claude received mixed reviews: some students found it smooth, while others were frustrated by its limit of only 10 prompts at a time. Perplexity and Grammarly had the lowest UI ratings, with students noting usability challenges and a less intuitive design than the other chatbots.

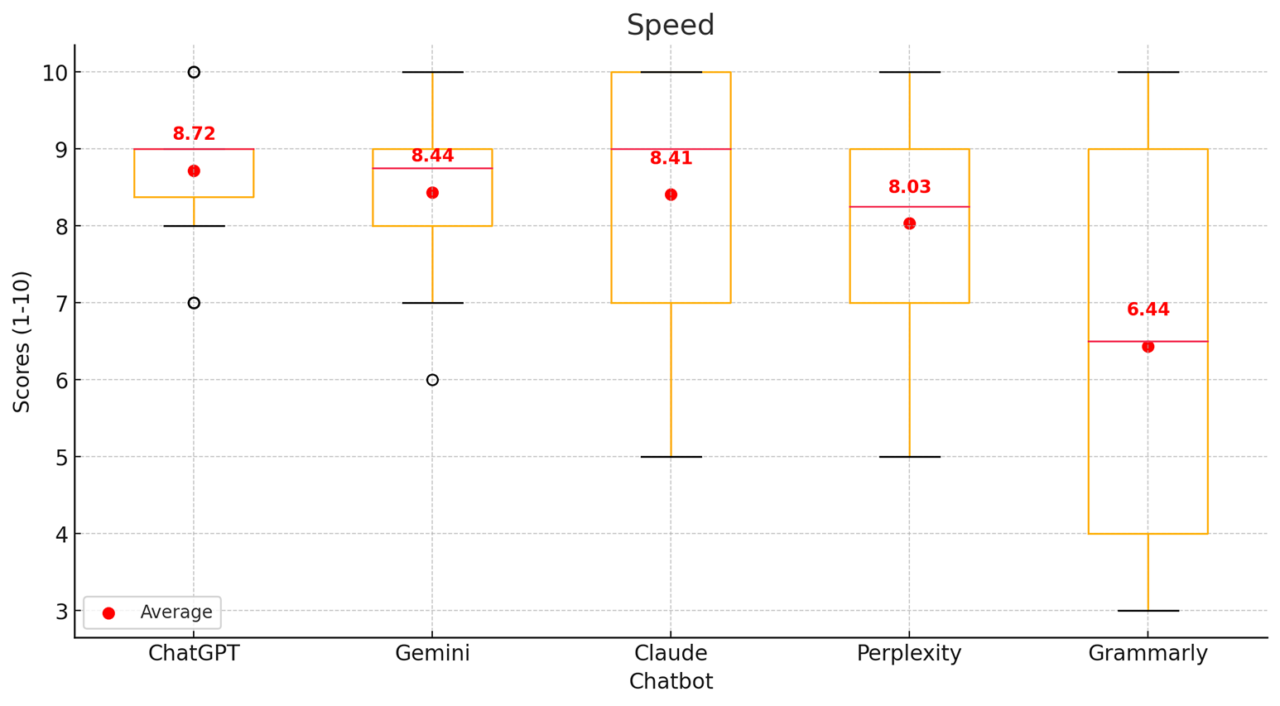

Speed: Which Chatbots Responded the Fastest?

Response speed is critical in AI interactions, and the findings revealed some clear trends. ChatGPT, Gemini and Claude were the fastest. Perplexity and Grammarly were noticeably slower, receiving lower scores and showing wider variability. Grammarly had the most inconsistent speed: sometimes it responded instantly and earned a perfect 10, but at other times it took much longer and scored as low as 3.

Final Thoughts: Which Chatbot Came Out on Top?

Overall, ChatGPT was the most well-rounded chatbot, excelling across multiple categories. However, Claude showed strong ethical safeguards and fact-checking abilities, while Gemini stood out in creative tasks. Perplexity and Grammarly had clear strengths but also notable weaknesses, particularly in handling complex reasoning and ethical prompts.

So next time you ask a chatbot for help – whether it's solving a math problem, writing a blog post or verifying a fact – keep in mind that not all AI assistants are created equal!